Don't Stop the Party! When Social Experience & Evaluation Converge

As part of the STEAM Factory’s commitment to interdisciplinary research and outreach, the Convergent Learning from Divergent Perspectives project (NSF AISL #1811119) brings together researchers from different disciplines, organized into theme-based cohorts to collaborate and share their research with public audiences. Our cohorts of researchers began their experience with multi-part training (modeled on Portal to the Public training) about science communication in informal learning settings. Since 2018, researchers have put this training into action by presenting pop-up demonstrations and “micro-lectures” at COSI After Dark and Franklinton Fridays, respectively. In their first presentations, researchers shared only their individual work. As they honed their presentation strategies and got to know the members of their cohorts, they also worked to create a collaborative High School <I/O> hackathon challenge and then identified strategies to present together on their themes in culminating team presentations.

But what did their audiences think? That’s where we come in. We work for COSI’s Center for Research and Evaluation, an interdisciplinary team of social scientists who study informal and nonformal learning experiences. Part of our role in the Convergent Learning project is to try to answer questions about what audiences take away from our researchers’ presentations, and what strategies and combinations of elements seem to make a difference. However, as we were developing instruments for data collection, we encountered another question: who wants to complete a questionnaire at a cocktail party or when they are exploring a science museum at night? The settings our researchers present in are challenging for us as evaluators because the social dimensions are important to the experience. While attendees expect to learn from our researcher cohorts, they are also often enjoying refreshments, socializing, and exploring active, exciting surroundings.

From the beginning of the project, we used a tablet-based exit questionnaire, which has helped us document what public audiences encountered in researchers’ presentations, which researchers they saw, and what kind of change they could describe in their own STEM-related interest and knowledge, along with some basic demographics. While we were satisfied with the content of the questionnaires, the method did not work for every context. Exit questionnaires were very effective at reaching respondents at COSI After Dark, a large evening event that people experience at their own pace. They were harder to use at Franklinton Fridays, where STEAM Factory programming is more intimate and structured. There, we found that our questionnaire oversampled drop-in visitors and undersampled visitors who stayed longer and participated in more synchronous experiences. Further, the questionnaire dampened an otherwise engaging event. In response, we adapted our method to 1) suit the event’s tone, 2) better sample those who experienced the micro-lectures, and 3) make evaluation a value-added experience.

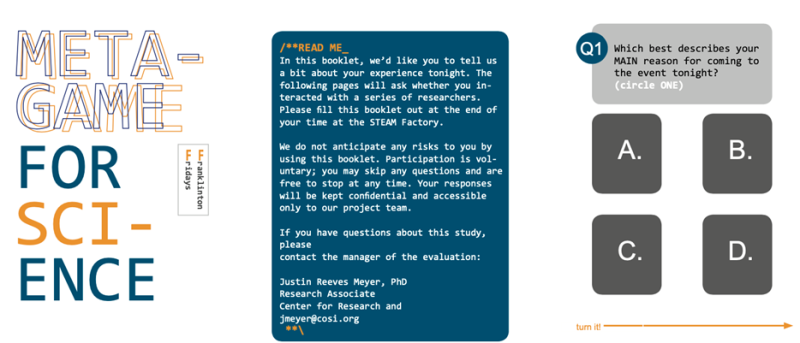

The Franklinton Friday Metagame was presented as a colorful workbook that reframed core questionnaire elements as a challenge experience to be completed during the event, rather than as another demand on people’s time as they were trying to leave. We planned an evaluation-focused micro-lecture of our own, in which we would walk respondents through the workbook while sharing more information about why we asked certain questions and what we would do with the data. At the end of the experience, participants would receive feedback about their own responses (i.e., which areas showed the most change for them) and a token trading card that reflected their area of greatest interest.

Image: First three pages of the Franklinton Friday Metagame (design by R. F. Kemper).

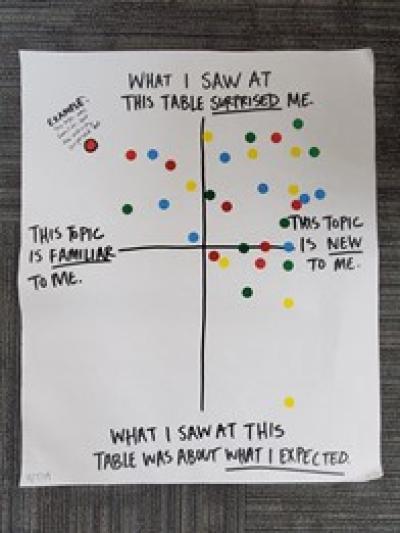

At COSI After Dark, we learned that we needed more information about the quality of visitor experiences at our researcher ‘pop-up’ tables. We added interactive intercept activities that asked visitors how they felt about their experience immediately after listening to or talking with one of our researchers at their ‘pop-up’ demonstrations. Using dot stickers, visitors marked how they felt about their experience on a large piece of paper. As evaluators, we used the stickers as a fun, quick way to start a more in-depth conversation about their experience, which we could then pair with our exit-questionnaire data.

Photo: Interactive evaluation activity for COSI After Dark (design and photo by D. Hayde).

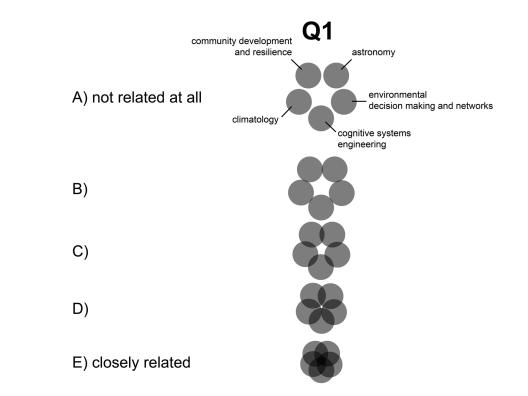

With the arrival of the COVID-19 pandemic, we needed to adapt again. As formerly in-person events changed to accommodate a virtual format, our evaluation methods followed suit. Micro-lectures moved to Zoom at the virtual editions of Columbus Science Pub and Franklinton Fridays. In evaluating these events, we used ‘pop-up’ graphic polls to ask audience members about their perceptions of science topics before and after the Zoom presentations.

Image: Graphic poll questions used during virtual events (design by J. R. Meyer).

As this project has shown, flexibility can be an important part of conducting informal learning evaluation. Sometimes, it’s prompted by identifying that an evaluation instrument or method is just not working as intended. Less often, it’s prompted by a global pandemic. In either case, you may need to adapt and revise your approach to better suit the context of the program and data collection. That said, it is still important to maintain rigor and consistency when studying the impact of programs. In situations where researchers might have to adapt methods into a variety of formats, we recommend using questions that can be easily repeated across different measures and identifying potential areas of experience that might be comparable even across different formats. We also encourage others working in this space to think holistically about participant experiences and their accompanying data. The most important part: always be sure to have fun!

Justin Reeves Meyer, Ph.D. (Co-PI, Convergent Learning from Divergent Perspectives project)

Justin is a researcher, designer, and musician, and has over 10 years of experience working with arts/cultural institutions. Focusing on the interactions between nonprofit institutions, communities, and urban space, Justin’s research uses econometrics and geographic, spatial analysis methods to help inform program and policy decisions. His research has been published in top-tier urban policy and urban planning journals, and featured in museum studies and neighborhood planning books. In addition to his work as a researcher, Justin also has experience as a graphic and urban designer, having worked for environmental and architectural design firms and interned with the City of New York’s Urban Design Division. As a professional classical singer, Justin has performed with several Grammy- and Gramophone-winning ensembles and recorded seven critically acclaimed discs on the Hyperion label.

Email: jmeyer@cosi.org

Donnelley (Dolly) Hayde, M.A. (Senior Personnel, Convergent Learning from Divergent Perspectives project)

Dolly has a strong track record in the study of informal learning and over a decade of experience as an applied social scientist and museum professional. In her project work, Dolly frequently collaborates with teams to incorporate meaningful, end-to-end planning and evaluation processes that meet the demands of real-life practice. In particular, she specializes in developing context-sensitive methods that incorporate play and experience design into the process of data collection, and she loves helping teams “make it work” when it comes to systematically gathering information in tricky settings. Her current research interests include cultural alignment in museum experiences, constructed challenge in interactivity, and the role of cultural and social capital in informal learning.

Email: dhayde@cosi.org

Laura Weiss, M.A. (Evaluator, Convergent Learning from Divergent Perspectives project)

Laura brings a strong background in evaluation, audience research, and informal learning. She holds a B.A. in History from the University of Illinois Springfield and an M.A. in Museum Studies from Indiana University-Purdue University Indianapolis. Prior to joining the Center for Research and Evaluation, Laura conducted research and evaluation at a variety of institution types – two zoos, a children’s museum, and an art museum. She has experience in every stage of the evaluation process from front-end interviews to interactive prototyping to summative evaluation for programs and exhibits. She enjoys thinking of creative ways to collect data and share findings.

Email: lweiss@cosi.org